The 403 Forbidden error is a common HTTP response in web scraping: the server understood your request but refuses to fulfill it. It's often returned when a Cloudflare-protected website recognizes your traffic as automated and denies access to the content.

This is what the error might look like in your terminal:

```text
HTTPError: 403 Client Error: Forbidden for url: https://www.g2.com/products/asana/reviews
```

Fortunately, you can overcome it with seven actionable techniques. Keep reading to see how to implement them:

- Use a web scraping API.

- Set a fake User Agent.

- Complete your headers.

- Avoid IP bans.

- Make requests through proxies.

- Use a headless browser.

- Use anti-Cloudflare plugins.

Although the code examples in this article are in Python, the principles can be adapted to your preferred language, such as Node.js, Java, or Ruby.

Seven Easy Ways to Bypass Error 403 Forbidden in Web Scraping

Here are seven ways to avoid the 403 Forbidden error. These solutions should help you access your desired data.

1. Use a Scraping API to Bypass Error 403 in Python (or Any Language)

The most effective way to bypass 403 errors in web scraping is using a web scraping API, as it deals with the anti-bot measures for you. Solutions like ZenRows provide all the features necessary to imitate natural user behavior, including JavaScript rendering, premium proxy rotation, geolocation, and advanced anti-bot bypass. This allows you to avoid the 403 Forbidden error and scrape without getting blocked.

Let's see how ZenRows works using a Cloudflare-protected page as the target URL.

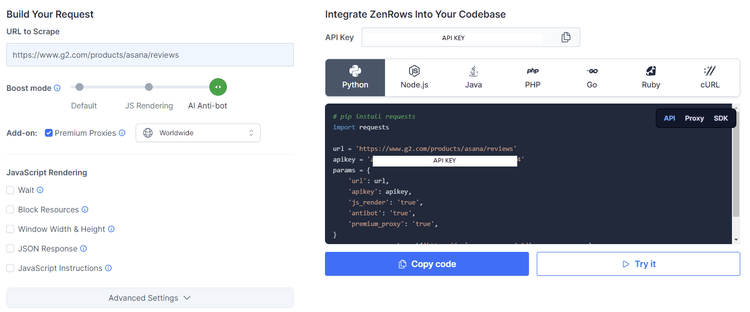

Sign up for free, and you'll land on the Request Builder page.

Input the target URL (in this case, https://www.g2.com/products/asana/reviews), then activate the Anti-bot boost mode and the Premium Proxies add-on.

That'll generate your request code on the right. Copy it into your preferred HTTP client, for example, Python Requests, which you can install using the following command:

```shell
pip install requests
```

Your code should look like this:

```python
import requests

url = 'https://www.g2.com/products/asana/reviews'
apikey = 'Your_API_KEY'

params = {
    'url': url,
    'apikey': apikey,
    'js_render': 'true',
    'antibot': 'true',
    'premium_proxy': 'true',
}

response = requests.get('https://api.zenrows.com/v1/', params=params)
print(response.text)
```

Run it, and you'll get the following result.

```html
<!DOCTYPE html><head>
...
<title>Asana Reviews 2023: Details, Pricing, & Features | G2</title>
...
```

Awesome, right? ZenRows makes bypassing the 403 Forbidden web scraping error in Python or any other language easy.

2. Set a Fake User Agent

Another way to bypass the 403 Forbidden error in web scraping is to set a fake User Agent. The User Agent is a string that web clients send with every request to identify themselves to the web server.

Non-browser web clients have unique User Agents that servers use to detect and block them. For example, here's what a Python Requests' User Agent looks like:

```text
python-requests/2.26.0
```

And here's a Chrome User Agent:

```text
Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36
```

The examples above show how easy it is for websites to differentiate between the two.

However, you can change your User Agent (UA) string to mimic Chrome or any other browser. In Python Requests, pass the fake User Agent in the headers parameter of your request.

Crafting a valid UA string can get complex, so check out our list of working User Agents for web scraping.

Let's see how to set a User Agent in Python by adding the new UA to the headers dictionary passed with the request:

```python
import requests

url = 'https://www.example.com'  # Replace this with the target website URL

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
}

response = requests.get(url, headers=headers)

if response.status_code == 200:
    print(response.text)  # Print the content of the response
else:
    print(f'Request failed with status code: {response.status_code}')
```

For the best results, rotate UAs randomly across requests. Read our tutorial on User Agents in Python to learn how to do that.
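The following minimal sketch shows the idea behind UA rotation. It assumes a small, hand-picked pool of desktop UA strings that will go stale as browsers update. USER_AGENTS and random_ua_headers are hypothetical names. Each call picks a different UA at random for the next request.

```python
import random

# Example desktop User Agent strings (assumed pool; refresh it as browsers update)
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:109.0) Gecko/20100101 Firefox/115.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.5 Safari/605.1.15",
]


def random_ua_headers() -> dict[str, str]:
    """Return a headers dict whose User-Agent is picked at random for this request."""
    return {"User-Agent": random.choice(USER_AGENTS)}
```

You'd pass the result as requests.get(url, headers=random_ua_headers()). If you also send browser-specific headers such as sec-ch-ua, keep them consistent with the chosen UA. A Firefox UA combined with Chrome client hints is an easy mismatch for anti-bot systems to spot.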

Note that setting a UA alone may not be enough, as websites can also detect patterns in your requests and block your scraper.

3. Complete Your Headers

With HTTP clients like Python Requests, the default headers don't include all the information that websites expect in a regular user's request. That makes your request stand out as suspicious, which can lead to a 403 error.

For example, these are the Python Requests' default headers:

```python
{
    'User-Agent': 'python-requests/2.26.0',
    'Accept-Encoding': 'gzip, deflate',
    'Accept': '*/*',
    'Connection': 'keep-alive',
}
```

And these are the headers a regular Chrome browser sends:

```python
headers = {
    'authority': 'www.google.com',
    'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
    'accept-language': 'en-US,en;q=0.9',
    'cache-control': 'max-age=0',
    'cookie': 'SID=ZAjX93QUU1NMI2Ztt_dmL9YRSRW84IvHQwRrSe1lYhIZncwY4QYs0J60X1WvNumDBjmqCA.; __Secure-...',  # value truncated
    'sec-ch-ua': '"Not/A)Brand";v="99", "Google Chrome";v="115", "Chromium";v="115"',
    'sec-ch-ua-arch': '"x86"',
    'sec-ch-ua-bitness': '"64"',
    'sec-ch-ua-full-version': '"115.0.5790.110"',
    'sec-ch-ua-full-version-list': '"Not/A)Brand";v="99.0.0.0", "Google Chrome";v="115.0.5790.110", "Chromium";v="115.0.5790.110"',
    'sec-ch-ua-mobile': '?0',
    'sec-ch-ua-model': '""',
    'sec-ch-ua-platform': '"Windows"',
    'sec-ch-ua-platform-version': '"15.0.0"',
    'sec-ch-ua-wow64': '?0',
    'sec-fetch-dest': 'document',
    'sec-fetch-mode': 'navigate',
    'sec-fetch-site': 'same-origin',
    'sec-fetch-user': '?1',
    'upgrade-insecure-requests': '1',
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',
    'x-client-data': '...',
}
```

The difference is clear. But you can make your Python Requests headers look like a regular browser's. To do that, define browser headers in the headers dictionary passed with the request, just as you did with the User Agent:

```python
import requests

url = 'https://www.example.com'

# Define your custom headers here
headers = {
    'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
    'accept-language': 'en-US,en;q=0.9',
    'cache-control': 'max-age=0',
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',
    # Add more headers as needed
}

response = requests.get(url, headers=headers)

if response.status_code == 200:
    print(response.text)  # Print the content of the response
else:
    print(f'Request failed with status code: {response.status_code}')
```

You can get your own browser's headers by opening any web page, preferably your target website, in a regular browser. Open the developer tools, go to the Network tab, select a request, and copy its request headers. For cleaner code and header structure, check out our guide on HTTP headers for web scraping.
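If you paste the copied headers into your script as text, a small helper can turn them into a dictionary for Requests. The sketch below assumes one name: value pair per line, roughly as DevTools displays raw request headers. parse_raw_headers is a hypothetical name. The helper skips HTTP/2 pseudo-headers such as :authority, which Requests (an HTTP/1.1 client) can't send. It also drops Accept-Encoding, so Requests negotiates only compression it can decode.

```python
def parse_raw_headers(raw: str) -> dict[str, str]:
    """Turn pasted 'name: value' lines into a headers dict for Python Requests."""
    headers = {}
    for line in raw.strip().splitlines():
        line = line.strip()
        # Skip blank lines and HTTP/2 pseudo-headers such as ":authority"
        if not line or line.startswith(":"):
            continue
        # Split on the first colon only, so values like URLs stay intact
        name, sep, value = line.partition(":")
        if not sep:
            continue  # not a header line
        name = name.strip().lower()
        # A copied "br" or "zstd" value can yield bodies Requests may not
        # decode without optional packages, so let Requests pick the encoding
        if name == "accept-encoding":
            continue
        headers[name] = value.strip()
    return headers
```

You'd then call requests.get(url, headers=parse_raw_headers(raw_headers)). Header names are lowercased, which is harmless because Requests treats them case-insensitively.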

4. Avoid IP Bans

Too many requests from the same IP address can result in IP bans. Most websites employ request rate limits to control traffic and resource usage. Therefore, exceeding a predefined limit will get you blocked.

In this case, you can reduce the risk of IP bans by adding delays between successive requests or by throttling requests (limiting how many you make within a given time frame).
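Request throttling can be sketched as a small sliding-window limiter. This is a minimal, standard-library-only sketch. RateLimiter, max_requests, and period are hypothetical names. The right limits depend on the target site, which rarely publishes them.

```python
import time
from collections import deque


class RateLimiter:
    """Allow at most max_requests calls to wait() in any period-second window."""

    def __init__(self, max_requests: int, period: float) -> None:
        self.max_requests = max_requests
        self.period = period
        self._timestamps = deque()  # monotonic times of recent requests

    def wait(self) -> None:
        now = time.monotonic()
        # Forget requests that have left the sliding window
        while self._timestamps and now - self._timestamps[0] >= self.period:
            self._timestamps.popleft()
        if len(self._timestamps) >= self.max_requests:
            # Sleep until the oldest request in the window expires
            time.sleep(self.period - (now - self._timestamps[0]))
            self._timestamps.popleft()
        self._timestamps.append(time.monotonic())
```

Create one limiter, for example RateLimiter(max_requests=5, period=10), and call limiter.wait() right before each requests.get(). Unlike a fixed delay, the limiter only pauses when a burst would exceed the cap.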

To delay requests in Python, set the delay between consecutive requests and the number of requests to make. Then loop through the requests and use the time.sleep() function to pause between them.

```python
import requests
import time

url = 'https://www.example.com'  # Replace this with the target website URL

headers = {
    # Add your custom headers here
}

# Define the time delay between requests (in seconds)
delay_seconds = 2

# Number of requests you want to make
num_requests = 10

for i in range(num_requests):
    response = requests.get(url, headers=headers)

    if response.status_code == 200:
        print(f"Request {i + 1} successful!")
        print(response.text)  # Print the content of the response
    else:
        print(f'Request {i + 1} failed with status code: {response.status_code}')

    # Introduce a delay between requests
    time.sleep(delay_seconds)
```

However, delays only help up to a point, since a site can still ban an IP that sends many requests over time. So, while understanding rate limiting and IP bans is critical to avoiding the 403 error, you also need proxies.

5. Make Requests through Proxies

A more robust way to avoid IP bans is routing requests through proxies. Proxies act as intermediaries between you and the target server. They let you spread requests across multiple IP addresses, which reduces the risk of getting blocked for sending too many requests from one address.

The most common proxy types for web scraping are datacenter and residential. Datacenter proxies use IP addresses owned by data centers, which websites can detect easily. Residential proxies, on the other hand, use real IP addresses assigned to home devices, so they're generally more reliable and harder for websites to detect.

Check out our guide on the best web scraping proxies to learn more.

To use proxies in Python Requests, pass your proxy details to the requests.get() function through the proxies parameter. You can grab some free proxies from FreeProxyList, but they only work for testing. In a real environment, you need premium proxies.

```python
import requests

url = 'https://www.example.com'  # Replace this with the target website URL

# Define your custom headers here
headers = {
    # Add your custom headers here
}

# Define the proxy you want to use (keys are the target URL's scheme)
proxy = {
    'http': 'http://username:password@proxy_ip:proxy_port',  # Replace with your proxy details
    'https': 'http://username:password@proxy_ip:proxy_port',  # Replace with your proxy details
}

response = requests.get(url, headers=headers, proxies=proxy)

if response.status_code == 200:
    print(response.text)  # Print the content of the response
else:
    print(f'Request failed with status code: {response.status_code}')
```

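The dictionary keys refer to the scheme of the target URL, so the https entry is used for HTTPS pages. The proxy URL itself usually starts with http://, since most proxies accept plain HTTP connections and tunnel HTTPS traffic through them. That way, a single proxy can serve both protocols.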

You might find our step-by-step tutorial on implementing Python Requests proxies useful. Among other things, you'll learn the much-needed practice of rotating proxies per request.
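Rotating proxies per request can be sketched with itertools.cycle, which hands out the next proxy from a fixed pool on every call. The proxy URLs below are placeholders, and next_proxies is a hypothetical helper name. A production rotator would also drop proxies that keep failing.

```python
from itertools import cycle

# Placeholder proxy URLs; replace them with the endpoints from your provider
PROXY_URLS = [
    "http://username:password@proxy1_ip:proxy_port",
    "http://username:password@proxy2_ip:proxy_port",
    "http://username:password@proxy3_ip:proxy_port",
]

# Endless round-robin iterator over the pool
_proxy_pool = cycle(PROXY_URLS)


def next_proxies() -> dict[str, str]:
    """Return a Requests proxies dict that uses the next proxy in the pool."""
    proxy_url = next(_proxy_pool)
    return {"http": proxy_url, "https": proxy_url}
```

Each call such as requests.get(url, proxies=next_proxies()) then goes out through a different IP address until the pool wraps around.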

However, as with User Agents, proxies alone may not be enough, so read on for the next tip.

6. Use a Headless Browser

Rendering JavaScript is critical for scraping modern websites, especially Single Page Applications (SPAs) that rely heavily on client-side rendering to display content. Moreover, anti-bot measures often serve JavaScript challenges to check whether the requester is a real browser operated by a human or a bot.

Scraping JavaScript-dependent pages with HTTP libraries like Python Requests can result in incomplete data or a 403 error, since these libraries don't execute JavaScript.

Fortunately, browser automation tools like Selenium can drive a headless browser that renders JavaScript and can often pass those challenges. Find out more in our guide on web scraping using Selenium with Python.
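Here's a minimal sketch of the headless-browser approach. It assumes Selenium 4.6 or later, which can fetch a matching ChromeDriver on its own, plus a local Chrome install. fetch_rendered_html is a hypothetical name. The function returns the HTML once the page's load event fires, so content injected later or a lengthy JavaScript challenge may need an explicit wait on top.

```python
def fetch_rendered_html(url: str) -> str:
    """Load url in headless Chrome and return the HTML after JavaScript has run."""
    # pip install selenium; imported here so the helper is only needed on demand
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")  # run Chrome without a visible window
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)  # navigates and waits for the page load event
        return driver.page_source  # the DOM as rendered by the browser
    finally:
        driver.quit()  # always close the browser, even if loading fails
```

For example, html = fetch_rendered_html("https://www.example.com") gives you markup you can parse as usual.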

7. Use Anti-Cloudflare Plugins

As mentioned earlier, Cloudflare-protected websites are a major source of the 403 Forbidden errors that developers hit while web scraping. Cloudflare acts as a reverse proxy between you and the target web server and only lets human-like requests through.

In some cases, anti-Cloudflare plugins may be enough to bypass Cloudflare. Popular options include:

- Undetected ChromeDriver: a Selenium plugin that patches ChromeDriver to shield Selenium from bot detection mechanisms.

- Cloudscraper: a library that works like Python Requests but uses a JavaScript engine to solve Cloudflare's JavaScript challenges.
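Here's a minimal Cloudscraper sketch, assuming the package is installed with pip install cloudscraper. create_scraper() returns a session object that behaves like a Requests session. fetch_with_cloudscraper is a hypothetical helper name. Whether it gets past a given site depends on which Cloudflare challenge that site serves.

```python
def fetch_with_cloudscraper(url: str) -> str:
    """Fetch url through Cloudscraper and return the response body."""
    # pip install cloudscraper; imported here so the helper is only needed on demand
    import cloudscraper

    scraper = cloudscraper.create_scraper()  # a Requests-compatible session
    response = scraper.get(url)
    response.raise_for_status()  # surface a 403 if the challenge wasn't solved
    return response.text
```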

Conclusion

Bypassing 403 Forbidden errors in web scraping usually takes several techniques layered on top of each other, like the ones covered here. However, an easy way to avoid detection in Python is to use a web scraping API like ZenRows. To try it out, sign up now to get your free API key.

Frequently Asked Questions

What Is 403 Forbidden while Scraping?

403 Forbidden while scraping is an HTTP status code meaning the web server refused your request. When scraping, this usually means the server detected your activity and denied you access, because anti-bot systems flagged your requests as automated and blocked them.

What Is Error Code 403 in Python?

Error code 403 in Python is a response that indicates the web server understood the request but refused to fulfill it due to insufficient permissions or bot detection. Websites might impose geolocation restrictions, rate limiting, and IP bans, among others.

What Is Error 403 in Python Scraping?

Error 403 in Python scraping refers to the HTTP 403 Forbidden status code, which a web server returns when it denies your request. This error is common when scraping with Python because Python libraries have distinctive signatures and fingerprints that anti-bot measures flag easily. For example, Python Requests' default User Agent and incomplete headers identify it to the web server as a bot.

How Do I Catch a 403 Error?

To catch a 403 error when scraping with Python, wrap your request in a try/except block. With Python Requests, call response.raise_for_status() inside the try block. It raises an HTTPError for 4xx and 5xx responses, so the except block can check for status 403 and let you apply the bypass techniques.
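The following minimal sketch uses Python Requests. text_or_none_on_403 is a hypothetical helper name. It returns None on a 403 so the caller can retry with different headers, a proxy, or a delay, and it re-raises any other HTTP error.

```python
from typing import Optional

import requests


def text_or_none_on_403(response: requests.Response) -> Optional[str]:
    """Return the response body, or None if the server answered 403 Forbidden."""
    try:
        # Raises requests.exceptions.HTTPError for 4xx and 5xx status codes
        response.raise_for_status()
    except requests.exceptions.HTTPError as err:
        if err.response is not None and err.response.status_code == 403:
            # Blocked: let the caller retry with other headers, a proxy, or a delay
            return None
        raise  # other HTTP errors (404, 500, ...) are not handled here
    return response.text


# Usage (requires network access):
# response = requests.get("https://www.example.com", timeout=10)
# html = text_or_none_on_403(response)
```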

How Do I Bypass the 403 Error in Web Scraping?

To bypass the 403 error in web scraping, you must emulate natural user behavior because the error is due mainly to anti-bot measures. You can use a web scraping API like ZenRows to abstract the process of mimicking an actual browser while you focus on extracting the necessary data.

How to Bypass the 403 Error in Web Scraping with Python?

To bypass error 403 in web scraping with Python, you can use tools like Undetected ChromeDriver and Cloudscraper, which are specifically designed to bypass anti-bot measures. Additionally, adding delays between requests, using premium residential proxies and headless browsers, and completing your headers can all contribute to a successful 403 error bypass.

Can You Bypass a 403 Error?

Yes, you can bypass a 403 error by implementing techniques and/or tools that make you appear like a regular user. Strategies like using headless browsers, rotating proxies and User Agents, or using a web scraping API like ZenRows can help you solve a 403 error.

What Tools to Bypass 403?

Tools to bypass 403 include ZenRows, Undetected ChromeDriver, and Cloudscraper. While the plugins are designed specifically for bypassing anti-bot measures, they have limitations and don't work in many cases. An all-in-one web scraping API saves you from having to work around those limitations yourself.

How Do I Get Past 403 Forbidden in Python?

You can get past 403 Forbidden in Python by employing methods to mimic an actual browser request. Techniques such as using headless browsers, rotating premium residential proxies, and completing HTTP headers can help you achieve that.
